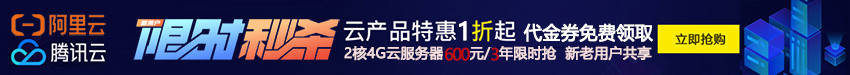

一、架构图

二、prometheus安装

2.1 可选的安装方式

- 二进制安装 # 一般针对于物理机安装

- 容器安装

- helm安装 # 以下三种都是给k8s使用的

- prometheus operator

- kube-prometheus stack # 是一个项目技术栈,包含:prometheus operator、高可用的prometheus、高可用的alertmanager、主机监控node exporter、grafana等

2.2 使用kube-prometheus stack安装,下图是各版本的支持,如果k8s版本较新,就下载个最新的release一般都会支持。

https://github.com/prometheus-operator/kube-prometheus/

2.3 下载对应的安装版本

git clone -b release-0.7 https://github.com/prometheus-operator/kube-prometheus.git

2.4安装CRD(自定义的资源)

# cd kube-prometheus/manifests/

# kubectl create -f setup/

2.5 查看operator的状态

# kubectl get pod -n monitoring | grep operator prometheus-operator-7649c7454f-pkbbl 2/2 Running 0 3m

2.6 按需求修改alertmanager的副本数,默认3个高可用组件

# vim alertmanager-alertmanager.yaml replicas: 1

2.7按需求修改prometheus的副本数,默认是2个

# vim prometheus-prometheus.yaml replicas: 1

2.8 修改镜像,默认的镜像无法直接下载,可以在dockerhub中查找

# cat kube-state-metrics-deployment.yaml | grep image image: quay.io/coreos/kube-state-metrics:v1.9.7 image: quay.io/brancz/kube-rbac-proxy:v0.8.0 image: quay.io/brancz/kube-rbac-proxy:v0.8.0

2.9创建prometheus集群

# kubectl create -f .

2.10 修改prometheus和grafana的web界面为nodeport的访问方式,因为没有配置pvc所以数据不是持久化的。生产环境需要配置两个的持久化存储

# kubectl edit svc -n monitoring prometheus-k8s ports: #在最下面添加type类型 type: NodePort # kubectl edit svc -n monitoring grafana type: NodePort

2.11 配置完成之后就可以通过主机的IP加端口进行访问了

# kubectl get svc -n monitoring | egrep "grafana|prometheus-k8s" grafana NodePort 10.107.73.70 <none> 3000:32351/TCP 1d prometheus-k8s NodePort 10.101.129.206 <none> 9090:32021/TCP 1d

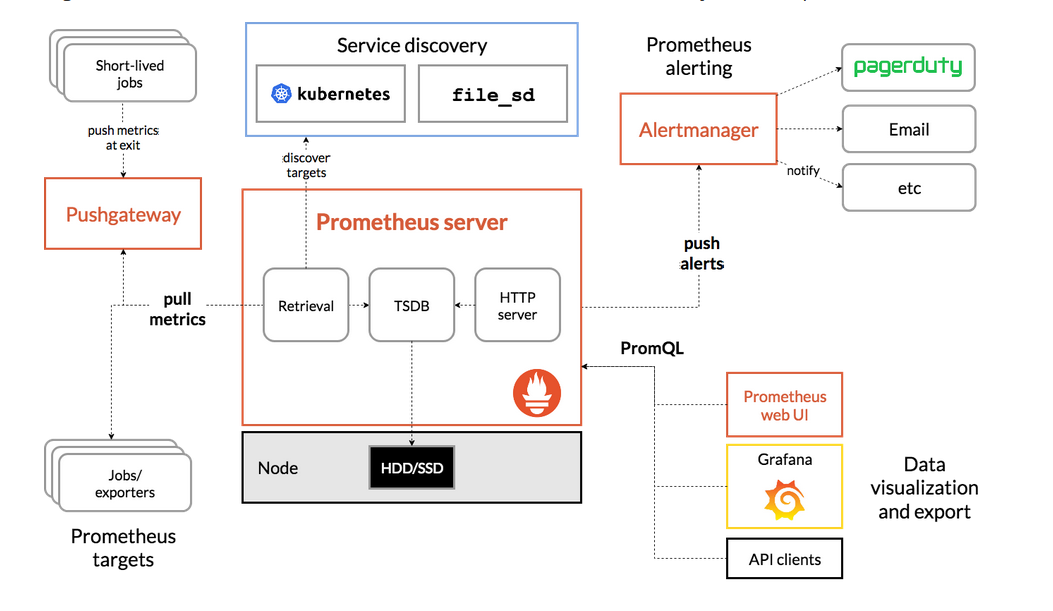

三、什么是ServiceMonitor

二进制安装、容器安装、helm安装通过prometheus.yml加载配置

prometheus operator、kube-prometheus stack通过ServiceMonitor发现监控目标,进行监控。serviceMonitor 是通过对service 获取数据的一种方式。

- promethus-operator可以通过serviceMonitor 自动识别带有某些 label 的service ,并从这些service 获取数据。

- serviceMonitor 也是由promethus-operator 自动发现的。

# kubectl get servicemonitor -n monitoring node-exporter -o yaml apiVersion: monitoring.coreos.com/v1 kind: ServiceMonitor selector: matchLabels: app.kubernetes.io/name: node-exporter # kubectl get svc -n monitoring -l app.kubernetes.io/name=node-exporter NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE node-exporter ClusterIP None <none> 9100/TCP 8d

# kubectl get ep -n monitoring node-exporter -o yaml apiVersion: v1 kind: Endpoints metadata: labels: app.kubernetes.io/name: node-exporter app.kubernetes.io/version: v1.0.1 service.kubernetes.io/headless: "" name: node-exporter namespace: monitoring selfLink: /api/v1/namespaces/monitoring/endpoints/node-exporter subsets: - addresses: - ip: 192.168.0.21 nodeName: k8s-master targetRef: kind: Pod name: node-exporter-96jmq namespace: monitoring resourceVersion: "8821390" uid: ba368321-1c3f-483a-a747-1e1c7b709b65 - ip: 192.168.0.25 nodeName: k8s-node1 targetRef: kind: Pod name: node-exporter-qqzl2 namespace: monitoring resourceVersion: "8821365" uid: 5daf9ff7-c120-4fcc-8412-da243c1224ce ports: - name: https port: 9100 protocol: TCP

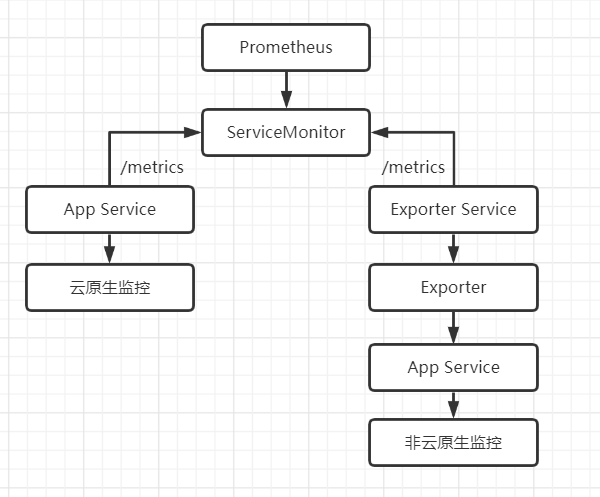

配置讲解

apiVersion: monitoring.coreos.com/v1 kind: ServiceMonitor metadata: name: etcd-k8s namespace: monitoring labels: app: etcd-k8s spec: jobLabel: etcd-k8s endpoints: - interval: 30s port: etcd-port # metrics端口 Service.spec.ports.name scheme: https # metrics接口协议,http或者https tlsConfig: caFile: /etc/prometheus/secrets/etcd-ssl/etcd-ca.pem # 证书路径 (在prometheus pod里路径) certFile: /etc/prometheus/secrets/etcd-ssl/etcd.pem keyFile: /etc/prometheus/secrets/etcd-ssl/etcd-key.pem insecureSkipVerify: true # 关闭证书校验 selector: matchLabels: app: etcd-k8s # 监控目标svc的标签 namespaceSelector: matchNames: - kube-system # 监控目标svc所在的命名空间 # 匹配Kube-system这个命名空间下面具有app=etcd-k8s这个label标签的Serve,job label用于检索job任务名称的标签。由于证书serverName和etcd中签发的证书可能不匹配,

所以添加了insecureSkipVerify=true将不再对服务端的证书进行校验

prometheus的监控流程

四、云原生应用ETCD的监控

4.1 本地测试etcd的metrics接口,我是使用kubeadm安装的集群

# grep -E "key-file|cert-file" /etc/kubernetes/manifests/etcd.yaml - --cert-file=/etc/kubernetes/pki/etcd/server.crt - --key-file=/etc/kubernetes/pki/etcd/server.key # curl -s --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://192.168.0.21:2379/metrics -k | tail -3 promhttp_metric_handler_requests_total{code="200"} 2 promhttp_metric_handler_requests_total{code="500"} 0 promhttp_metric_handler_requests_total{code="503"} 0

4.2 创建etcd的service和endpoints

# cat etcd.yaml apiVersion: v1 kind: Endpoints metadata: labels: app: etcd-k8s name: etcd-k8s namespace: kube-system subsets: - addresses: # etcd节点对应的主机ip,有几台就写几台 - ip: 192.168.0.21 ports: - name: etcd-port port: 2379 # etcd端口 protocol: TCP --- apiVersion: v1 kind: Service metadata: labels: app: etcd-k8s name: etcd-k8s namespace: kube-system spec: ports: - name: etcd-port port: 2379 protocol: TCP targetPort: 2379 type: ClusterIP # kubectl create -f etcd.yaml # kubectl get svc -n kube-system -l app=etcd-k8s # 查找svc的ip,将上面的测试ip换成svc的地址再测试 NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE etcd-k8s ClusterIP 10.110.151.13 <none> 2379/TCP 74s # curl -s --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key https://10.110.151.13:2379/metrics -k | tail -3 promhttp_metric_handler_requests_total{code="200"} 5 promhttp_metric_handler_requests_total{code="500"} 0 promhttp_metric_handler_requests_total{code="503"} 0

4.3 将etcd的证书创建到secret中,让prometheus进行挂载,因为是prometheus去请求etcd,必须要的prometheus在同一命名空间

kubectl create secret generic etcd-ssl --from-file=/etc/kubernetes/pki/etcd/server.crt --from-file=/etc/kubernetes/pki/etcd/server.key --from-file=/etc/kubernetes/pki/etcd/ca.crt -n monitoring

4.4 将secret挂载到prometheus的pod是

# kubectl edit prometheus k8s -n monitoring replicas: 2 secrets: - etcd-ssl #添加secret名称,保存退出后prometheus的pod会重启 # kubectl get pod -n monitoring | grep prometheus-k8s prometheus-k8s-0 2/2 Running 1 46s prometheus-k8s-1 2/2 Running 1 54s # kubectl exec -it prometheus-k8s-0 -n monitoring -- sh # 查看是否挂载成功 /prometheus $ ls /etc/prometheus/secrets/etcd-ssl/ ca.crt server.crt server.key

4.4 创建ServiceMonitor将service的配置加载到Prometheus

# cat etcd-servicemonitor.yaml apiVersion: monitoring.coreos.com/v1 kind: ServiceMonitor metadata: name: etcd-k8s namespace: monitoring labels: app: etcd-k8s spec: jobLabel: app endpoints: - interval: 30s port: etcd-port # kubectl get svc -n kube-system etcd-k8s -o yaml 中svc的pod名称 scheme: https tlsConfig: caFile: /etc/prometheus/secrets/etcd-ssl/ca.crt certFile: /etc/prometheus/secrets/etcd-ssl/server.crt keyFile: /etc/prometheus/secrets/etcd-ssl/server.key insecureSkipVerify: true # 关闭证书校验 selector: matchLabels: app: etcd-k8s # 跟scv的name保持一致 namespaceSelector: matchNames: - kube-system # 跟svc所在namespace保持一致

# kubectl create -f etcd-servicemonitor.yaml

匹配Kube-system这个命名空间下面具有app=etcd-k8s这个label标签的Serve,job label用于检索job任务名称的标签。由于证书serverName和etcd中签发的证书可能不匹配,所以添加了insecureSkipVerify=true将不再对服务端的证书进行校验

4.5 登录页面查看

4.6 导入grafana 模板

https://grafana.com/grafana/dashboards/3070

五、非云原生的监控exporter

我们使用MySQL并没有部署在k8s内,使用prometheus监控k8s集群外的MySQL

5.1 创建mysql-exporter的deployment获取mysql的监控数据

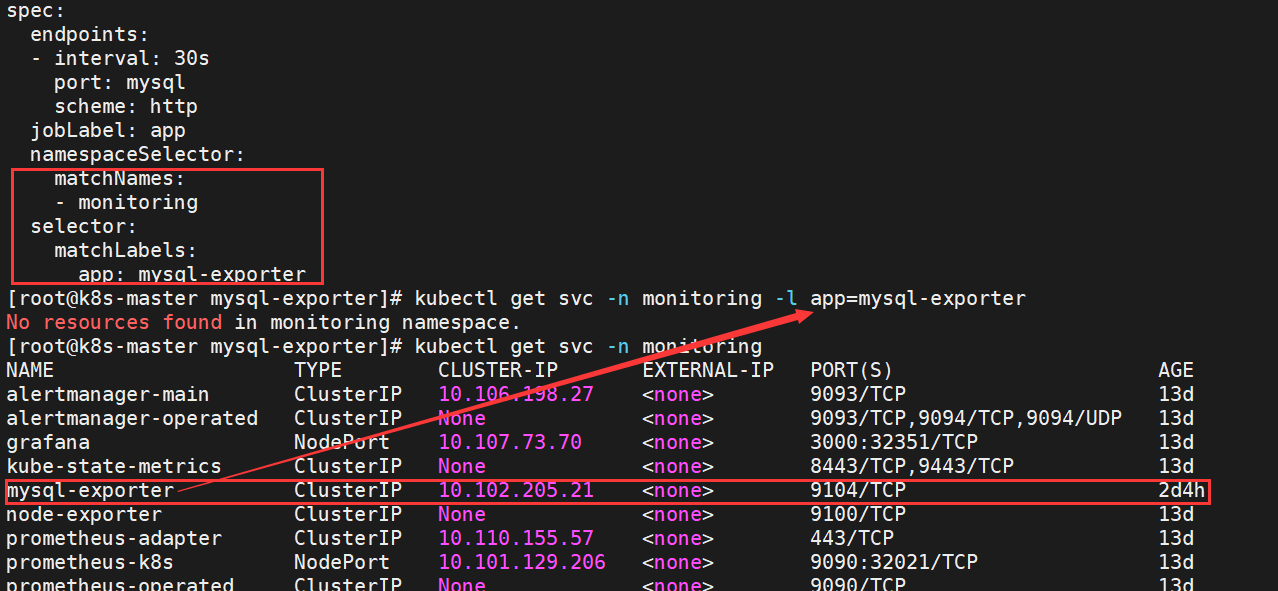

# cat mysql-exporter.yaml apiVersion: apps/v1 kind: Deployment metadata: name: mysql-exporter-deployment namespace: monitoring spec: replicas: 1 selector: matchLabels: app: mysql-exporter template: metadata: labels: app: mysql-exporter spec: containers: - name: mysql-exporter imagePullPolicy: IfNotPresent image: prom/mysqld-exporter env: - name: DATA_SOURCE_NAME value: "exporter:childe12#@(192.168.0.247:3306)/" ports: - containerPort: 9104 resources: requests: cpu: 500m memory: 1024Mi limits: cpu: 1000m memory: 2048Mi --- apiVersion: v1 kind: Service metadata: name: mysql-exporter namespace: monitoring labels: app: mysql-exporter spec: type: ClusterIP selector: app: mysql-exporter ports: - name: mysql port: 9104 targetPort: 9104 protocol: TCP # kubectl create -f mysql-exporter.yaml # kubectl get svc -n monitoring | grep mysql-exporter mysql-exporter ClusterIP 10.102.205.21 <none> 9104/TCP 98m # curl -s 10.102.205.21:9104/metrics | tail -1 # 通过svc的地址能获取到mysql的监控数据即可 promhttp_metric_handler_requests_total{code="503"} 0

5.2 创建 servicemonitor

# cat mysql-servicemonitor.yaml apiVersion: monitoring.coreos.com/v1 kind: ServiceMonitor metadata: name: mysql-exporter namespace: monitoring labels: app: mysql-exporter spec: jobLabel: mysql-monitoring endpoints: - interval: 30s port: mysql # svc的名称 scheme: http selector: matchLabels: app: mysql-exporter # 跟scv的name保持一致 namespaceSelector: matchNames: - monitoring # 跟svc所在namespace保持一致 # kubectl create -f mysql-servicemonitor.yaml

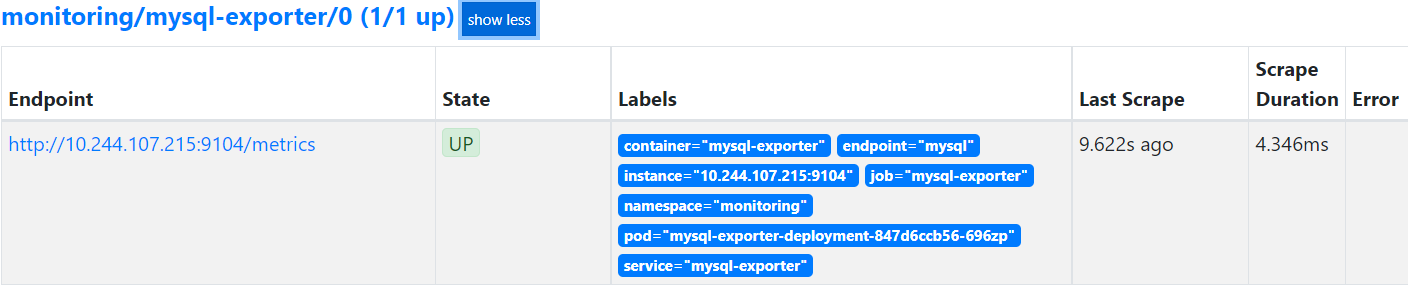

5.3 在prometheus中查看数据

5.4 监控失败排查思路

- 确认ServiceMonitor是否创建成功

- 确认ServiceMonitor标签是否匹配正确

- 确认在Pormetheus中是否生成了相关的配置

- 确认ServiceMonitor是否能匹配到Service(自己当时就没有匹配到标签所以查了好久)

- 确认通过Service是否能够访问/metrics接口

- 确认Service的端口是否和Scheme和ServiceMonitor的端口一致

六、使用静态配置文件配置

touch prometheus-additional.yaml kubectl create secret generic additional-configs - -from-file=prometheus-additional.yaml -n monitoring # kubectl describe secret additional-configs -n moni toring Name: additional-configs Namespace: monitoring Labels: <none> Annotations: <none> Type: Opaque Data ==== prometheus-additional.yaml: 0 bytes # kubectl edit prometheus -n monitoring k8s spec: additionalScrapeConfigs: key: prometheus-additional.yaml name: additional-configs optional: true # 修改配置文件 # cat prometheus-additional.yaml - job_name: 'node' static_configs: - targets: ['192.168.0.26:9100'] # 进行热更新 # kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml --dry-run=client -o yaml | kubectl replace -f - -n monitoring # 验证配置 # kubectl get secret -n monitoring additional-configs -oyaml apiVersion: v1 data: prometheus-additional.yaml: LSBqb2JfbmFtZTogJ25vZGUnCiAgc3RhdGljX2NvbmZpZ3M6CiAgLSB0YXJnZXRzOiBbJzE5Mi4xNjguMC4yNjo5MTAwJ10K kind: Secret # echo "LSBqb2JfbmFtZTogJ25vZGUnCiAgc3RhdGljX2NvbmZpZ3M6CiAgLSB0YXJnZXRzOiBbJzE5Mi4xNjguMC4yNjo5MTAwJ10K" | base64 -d - job_name: 'node' static_configs: - targets: ['192.168.0.26:9100']